Overview

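For BlueStore, Ceph's new storage engine, RocksDB plays a central role: it stores BlueStore's metadata. Understanding it makes it easier to understand how BlueStore is implemented and to analyze the problems that come up in practice.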

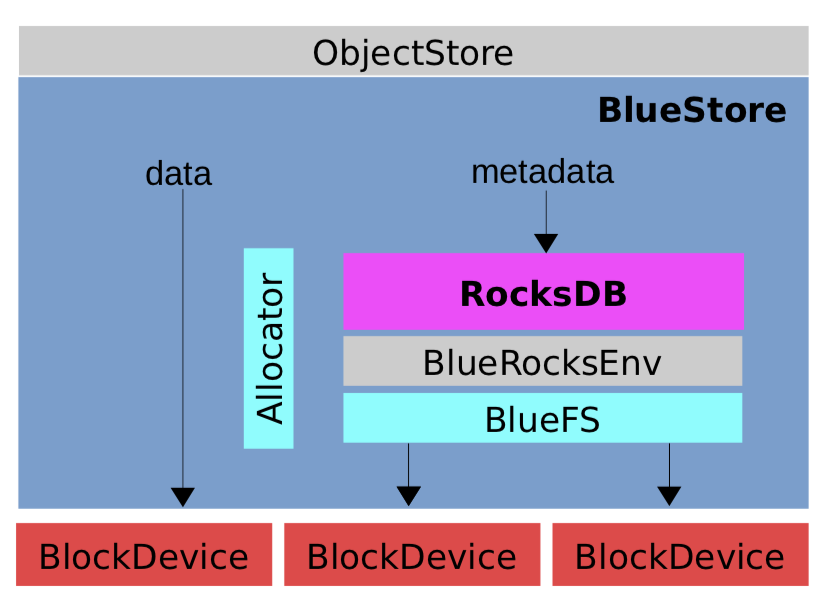

BlueStore架构

BlueStore的架构图如下,还是被广泛使用的一张:

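As the figure shows, RocksDB is the BlueStore component that holds BlueStore's metadata. Setting the other components aside, this article describes in detail what BlueStore stores in RocksDB and how that is implemented.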

BlueStore Class Definition

The definition of BlueStore in Ceph and its main data members are as follows:

```cpp
class BlueStore : public ObjectStore, public md_config_obs_t {
```

The key data members are:

1) BlueFS

Definition: BlueFS *bluefs = nullptr;

A custom filesystem built to back RocksDB. It implements only the APIs that RocksEnv requires.

It is initialized in _open_db():

```cpp
int BlueStore::_open_db(bool create)
```
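BlueFS only needs to offer the file operations that RocksDB actually uses. The sketch below illustrates that idea. It assumes RocksDB's needs reduce to creating files, appending to them, reading them back, renaming, deleting and listing. ToyBlueFS and all its methods are hypothetical. The real BlueFS places file data in extents on the underlying block devices and records its own metadata in a journal.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical toy, not the real BlueFS: an in-memory "filesystem" offering
// only create, append-only writes, random reads, rename, delete and list.
class ToyBlueFS {
 public:
  // Create an empty file, truncating it if it already exists.
  void create(const std::string& dir, const std::string& name) {
    files_[path(dir, name)].clear();
  }

  // Files only grow at the end; there are no in-place overwrites.
  void append(const std::string& dir, const std::string& name,
              const std::string& data) {
    auto it = files_.find(path(dir, name));
    assert(it != files_.end() && "append to a missing file");
    it->second += data;
  }

  // Read up to len bytes starting at off (clipped to the file size).
  std::string read(const std::string& dir, const std::string& name,
                   std::size_t off, std::size_t len) const {
    const std::string& f = files_.at(path(dir, name));
    return off >= f.size() ? std::string() : f.substr(off, len);
  }

  // Rename within a directory; returns false if the source is missing.
  bool rename(const std::string& dir, const std::string& from,
              const std::string& to) {
    if (from == to) return files_.count(path(dir, from)) == 1;
    auto it = files_.find(path(dir, from));
    if (it == files_.end()) return false;
    files_[path(dir, to)] = std::move(it->second);
    files_.erase(it);
    return true;
  }

  void remove(const std::string& dir, const std::string& name) {
    files_.erase(path(dir, name));
  }

  // Names of all files in dir, in sorted order.
  std::vector<std::string> list(const std::string& dir) const {
    std::vector<std::string> out;
    const std::string p = dir + "/";
    for (auto it = files_.lower_bound(p);
         it != files_.end() && it->first.compare(0, p.size(), p) == 0; ++it)
      out.push_back(it->first.substr(p.size()));
    return out;
  }

 private:
  static std::string path(const std::string& dir, const std::string& name) {
    return dir + "/" + name;
  }
  std::map<std::string, std::string> files_;  // full path -> contents
};
```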

2) RocksDB

Definition: KeyValueDB *db = nullptr;

BlueStore stores all of its metadata and OMap data through this DB. The backend used here is RocksDB, and it is also initialized in _open_db():

```cpp
int BlueStore::_open_db(bool create)
```

3) BlockDevice

Definition: BlockDevice *bdev = nullptr;

The block devices that hold BlueStore's data, DB and WAL. The following types exist:

- KernelDevice

- NVMEDevice

- PMEMDevice

It is initialized in the code as follows:

```cpp
int BlueStore::_open_bdev(bool create)
```
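BlueStore keeps all three backends behind a single BlockDevice *bdev pointer, so the backend choice is a factory decision made when the device is opened. Below is a minimal sketch of that pattern. DeviceKind, make_device and the Toy* classes are hypothetical. How real Ceph decides which backend to use (configuration, device path) is not covered in this section.

```cpp
#include <cassert>
#include <memory>
#include <string>

// Hypothetical stand-ins for the three backends listed above.
class ToyBlockDevice {
 public:
  virtual ~ToyBlockDevice() = default;
  virtual std::string type() const = 0;
};
class ToyKernelDevice : public ToyBlockDevice {
 public:
  std::string type() const override { return "KernelDevice"; }
};
class ToyNVMEDevice : public ToyBlockDevice {
 public:
  std::string type() const override { return "NVMEDevice"; }
};
class ToyPMEMDevice : public ToyBlockDevice {
 public:
  std::string type() const override { return "PMEMDevice"; }
};

enum class DeviceKind { Kernel, Nvme, Pmem };

// Callers only ever see the base-class pointer, like BlueStore's bdev.
std::unique_ptr<ToyBlockDevice> make_device(DeviceKind kind) {
  switch (kind) {
    case DeviceKind::Kernel: return std::make_unique<ToyKernelDevice>();
    case DeviceKind::Nvme:   return std::make_unique<ToyNVMEDevice>();
    case DeviceKind::Pmem:   return std::make_unique<ToyPMEMDevice>();
  }
  return nullptr;  // not reached for valid enum values
}
```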

4) FreelistManager

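Definition: FreelistManager *fm = nullptr;

Persists BlueStore's free-space information, that is, which blocks on the device are free.

The default implementation is BitmapFreelistManager.

```cpp
int BlueStore::_open_fm(bool create){
```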
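A bitmap freelist records one bit per block. The toy below uses 1 for allocated and flips ranges with XOR, so allocating a free range and releasing an allocated range are the same operation. To our understanding the real BitmapFreelistManager also relies on XOR, applied through a RocksDB merge operator on its bitmap keys, but treat that as an assumption. ToyBitmapFreelist is hypothetical and keeps everything in memory.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical in-memory bitmap freelist: one bit per block, 1 = allocated.
class ToyBitmapFreelist {
 public:
  ToyBitmapFreelist(uint64_t size_bytes, uint64_t bytes_per_block)
      : bpb_(bytes_per_block), bits_(size_bytes / bytes_per_block, false) {}

  // Mark [off, off+len) allocated; the range must currently be free.
  void allocate(uint64_t off, uint64_t len) { xor_range(off, len); }
  // Mark [off, off+len) free; the range must currently be allocated.
  void release(uint64_t off, uint64_t len) { xor_range(off, len); }

  bool is_allocated(uint64_t off) const { return bits_.at(off / bpb_); }

  uint64_t free_bytes() const {
    uint64_t n = 0;
    for (bool b : bits_)
      if (!b) ++n;
    return n * bpb_;
  }

 private:
  // XOR toggles every bit in the range, which is why one helper serves both.
  void xor_range(uint64_t off, uint64_t len) {
    assert(off % bpb_ == 0 && len % bpb_ == 0 && "unaligned extent");
    for (uint64_t i = off / bpb_; i < (off + len) / bpb_; ++i)
      bits_.at(i) = !bits_.at(i);
  }

  uint64_t bpb_;            // bytes per block
  std::vector<bool> bits_;  // one bit per block
};
```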

5) Allocator

Definition: Allocator *alloc = nullptr;

BlueStore's disk-space allocator, which hands out extents for blobs. The following implementations are supported:

- BitmapAllocator

- StupidAllocator

In the version analyzed here, the default is StupidAllocator; later Ceph releases changed the default.
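To illustrate what an Allocator does (hand out extents, take them back, merge adjacent free space), here is a simplified first-fit allocator over an offset-to-length free map. This is not StupidAllocator's algorithm; to our understanding StupidAllocator groups free extents into size bins. ToyExtentAllocator is hypothetical.

```cpp
#include <cassert>
#include <cstdint>
#include <iterator>
#include <map>
#include <optional>

// Hypothetical first-fit extent allocator (NOT StupidAllocator).
class ToyExtentAllocator {
 public:
  void init_add_free(uint64_t off, uint64_t len) { release(off, len); }

  // Take len bytes from the first free extent large enough; nullopt if none.
  std::optional<uint64_t> allocate(uint64_t len) {
    for (auto it = free_.begin(); it != free_.end(); ++it) {
      if (it->second >= len) {
        uint64_t off = it->first;
        uint64_t rest = it->second - len;
        free_.erase(it);
        if (rest) free_[off + len] = rest;  // keep the unused tail
        return off;
      }
    }
    return std::nullopt;
  }

  // Return [off, off+len), merging with neighbours. No double-free check.
  void release(uint64_t off, uint64_t len) {
    auto next = free_.lower_bound(off);
    if (next != free_.begin()) {
      auto prev = std::prev(next);
      if (prev->first + prev->second == off) {  // merge with left neighbour
        off = prev->first;
        len += prev->second;
        free_.erase(prev);
      }
    }
    if (next != free_.end() && off + len == next->first) {  // merge right
      len += next->second;
      free_.erase(next);
    }
    free_[off] = len;
  }

  uint64_t free_bytes() const {
    uint64_t n = 0;
    for (const auto& e : free_) n += e.second;
    return n;
  }
  std::size_t fragments() const { return free_.size(); }

 private:
  std::map<uint64_t, uint64_t> free_;  // offset -> length
};
```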

6) Summary: the BlueStore mount process

During mount, BlueStore calls a series of functions to initialize the components it uses, in the following order:

```cpp
int BlueStore::_mount(bool kv_only)
```
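The order matters because each component depends on the one before it. BlueFS and RocksDB sit on the block device, the freelist is stored inside RocksDB (PREFIX_ALLOC), and the allocator is populated from the freelist. The following is a hypothetical model (ToyMount), not the real _mount(). It opens steps in dependency order and, if one step fails, closes the steps that already succeeded in reverse order.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical model of a dependency-ordered mount (not the real _mount()).
struct ToyMount {
  std::vector<std::string> log;  // what happened, in order
  std::string fail_at;           // step to fail on purpose (for testing)

  bool open_step(const std::string& name) {
    if (name == fail_at) {
      log.push_back("fail " + name);
      return false;
    }
    log.push_back("open " + name);
    return true;
  }
  void close_step(const std::string& name) { log.push_back("close " + name); }

  // Returns 0 on success, -1 after undoing every step that had succeeded.
  int mount() {
    // bdev first (BlueFS/RocksDB live on it), then db, then the freelist
    // stored in db, then the allocator built from the freelist.
    const std::vector<std::string> steps = {"bdev", "db", "fm", "alloc"};
    for (std::size_t i = 0; i < steps.size(); ++i) {
      if (!open_step(steps[i])) {
        for (std::size_t j = i; j-- > 0;) close_step(steps[j]);
        return -1;
      }
    }
    return 0;
  }
};
```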

RocksDB Definition

RocksDB is defined as follows; it implements the KeyValueDB interface:

```cpp
/**
```

The base class KeyValueDB is defined as follows (only a few key definitions are listed):

```cpp
/**
```
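KeyValueDB exposes a transaction-style write path. Writes are queued on a transaction object and take effect together on submit. The toy below borrows the method names get_transaction, submit_transaction, set and rmkey from KeyValueDB to show that shape. Everything else is hypothetical: values are std::string rather than bufferlist, and atomicity is trivial here because everything is in memory.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <optional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical, heavily simplified mirror of the KeyValueDB write path.
class ToyKV {
 public:
  class Transaction {
   public:
    void set(const std::string& prefix, const std::string& key,
             const std::string& val) {
      ops_.push_back({prefix, key, val});
    }
    void rmkey(const std::string& prefix, const std::string& key) {
      ops_.push_back({prefix, key, std::nullopt});
    }

   private:
    friend class ToyKV;
    struct Op {
      std::string prefix, key;
      std::optional<std::string> val;  // nullopt means delete
    };
    std::vector<Op> ops_;
  };

  std::shared_ptr<Transaction> get_transaction() {
    return std::make_shared<Transaction>();
  }

  // Nothing is visible until submit; then all queued ops are applied.
  int submit_transaction(const std::shared_ptr<Transaction>& t) {
    for (const auto& op : t->ops_) {
      auto k = std::make_pair(op.prefix, op.key);
      if (op.val) data_[k] = *op.val;
      else data_.erase(k);
    }
    return 0;
  }

  std::optional<std::string> get(const std::string& prefix,
                                 const std::string& key) const {
    auto it = data_.find({prefix, key});
    if (it == data_.end()) return std::nullopt;
    return it->second;
  }

 private:
  std::map<std::pair<std::string, std::string>, std::string> data_;
};
```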

In the code, RocksDB is typically used like this:

```cpp
KeyValueDB::Iterator it;
```
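Reads typically go through an iterator scoped to one prefix, using calls such as get_iterator(prefix), seek_to_first(), valid(), next(), key() and value(). One way to implement that on a flat, sorted key space is to join the prefix and key with a separator byte. To our understanding RocksDBStore uses a '\0' byte as that separator, but treat this as an assumption. ToyFlatKV is hypothetical.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical store: keys are kept flat as prefix + '\0' + key, so all keys
// of one prefix form a contiguous, sorted range.
class ToyFlatKV {
 public:
  void set(const std::string& prefix, const std::string& key,
           const std::string& val) {
    data_[prefix + '\0' + key] = val;
  }

  class Iterator {
   public:
    Iterator(const std::map<std::string, std::string>& d, std::string prefix)
        : d_(d), p_(std::move(prefix) + '\0'), it_(d_.end()) {}
    void seek_to_first() { it_ = d_.lower_bound(p_); }
    // Valid while we are still inside this prefix's range.
    bool valid() const {
      return it_ != d_.end() && it_->first.compare(0, p_.size(), p_) == 0;
    }
    void next() { ++it_; }
    std::string key() const { return it_->first.substr(p_.size()); }
    const std::string& value() const { return it_->second; }

   private:
    const std::map<std::string, std::string>& d_;
    std::string p_;  // prefix plus separator
    std::map<std::string, std::string>::const_iterator it_;
  };

  Iterator get_iterator(const std::string& prefix) const {
    return Iterator(data_, prefix);
  }

 private:
  std::map<std::string, std::string> data_;
};
```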

KV Categories in RocksDB

All of BlueStore's KV data can be stored in RocksDB. The implementation groups it by key prefix:

```cpp
// kv store prefixes
```
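Each category is identified by a short prefix string, which ceph-kvstore-tool also prints in the first column of its output. The single-letter values below are, to the best of our recollection, those defined in Luminous-era BlueStore.cc. The S and B letters match the tool output shown later in this article, but verify the others against the source tree you are reading. KvKind and classify_prefix are hypothetical helpers.

```cpp
#include <cassert>
#include <string>

// Hypothetical helper; the prefix letters are assumed, see the note above.
enum class KvKind { Super, Stat, Coll, Obj, Omap, Deferred, Alloc, SharedBlob, Unknown };

KvKind classify_prefix(const std::string& prefix) {
  if (prefix == "S") return KvKind::Super;       // PREFIX_SUPER
  if (prefix == "T") return KvKind::Stat;        // PREFIX_STAT
  if (prefix == "C") return KvKind::Coll;        // PREFIX_COLL
  if (prefix == "O") return KvKind::Obj;         // PREFIX_OBJ
  if (prefix == "M") return KvKind::Omap;        // PREFIX_OMAP
  if (prefix == "L") return KvKind::Deferred;    // PREFIX_DEFERRED
  if (prefix == "B") return KvKind::Alloc;       // PREFIX_ALLOC
  if (prefix == "X") return KvKind::SharedBlob;  // PREFIX_SHARED_BLOB
  return KvKind::Unknown;
}
```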

Each prefix is described in detail below.

1) PREFIX_SUPER

BlueStore's superblock, which holds BlueStore's own metadata, for example:

```
S blobid_max
```
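Superblock values such as blobid_max are integers. The sketch below assumes they are written with Ceph's usual fixed-width little-endian encoding of a uint64_t; check this against the encode() call in your source tree. Under that assumption, a raw value dumped by the tool can be decoded as shown. encode_le64 and decode_le64 are hypothetical helpers.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// Encode a uint64_t as 8 little-endian bytes (least significant byte first).
std::string encode_le64(uint64_t x) {
  std::string v(8, '\0');
  for (int i = 0; i < 8; ++i) v[i] = static_cast<char>((x >> (8 * i)) & 0xff);
  return v;
}

// Decode 8 little-endian bytes back into a uint64_t.
uint64_t decode_le64(const std::string& v) {
  assert(v.size() == 8 && "expected an 8-byte value");
  uint64_t x = 0;
  for (int i = 7; i >= 0; --i) x = (x << 8) | static_cast<unsigned char>(v[i]);
  return x;
}
```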

2) PREFIX_STAT

bluestore_statfs information:

```cpp
class BlueStore : public ObjectStore,
```

3) PREFIX_COLL

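Collection metadata. A Collection corresponds to a logical PG, and every ObjectStore implementation provides its own Collection.

For each PG that BlueStore stores, it writes one Collection KV pair to RocksDB.

```cpp
class BlueStore : public ObjectStore,
```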

4) PREFIX_OBJ

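Object metadata. For every object stored in BlueStore, its Onode information (plus other data) is written to RocksDB as the value.

To access the object, BlueStore first queries RocksDB, builds the in-memory Onode structure from the result, and then works with that structure.

```cpp
class BlueStore : public ObjectStore,
```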
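The lookup path just described can be sketched as follows: check memory first, and on a miss read the O-prefixed record and build an Onode from it. The names here are hypothetical, and the record is just a decimal size string. The real code decodes a binary onode from a bufferlist and keeps onodes in a bounded cache.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <memory>
#include <string>

struct ToyOnode {  // hypothetical in-memory object metadata
  std::string oid;
  uint64_t size = 0;
};

class ToyOnodeLookup {
 public:
  std::map<std::string, std::string> db_obj;  // simulated "O" records: oid -> value
  int db_reads = 0;                           // how often we had to hit the DB

  std::shared_ptr<ToyOnode> get_onode(const std::string& oid) {
    auto c = cache_.find(oid);
    if (c != cache_.end()) return c->second;  // cache hit: no DB access
    ++db_reads;
    auto r = db_obj.find(oid);
    if (r == db_obj.end()) return nullptr;    // object does not exist
    auto o = std::make_shared<ToyOnode>();
    o->oid = oid;
    o->size = std::stoull(r->second);         // stand-in for decoding the value
    cache_[oid] = o;                          // keep it for later accesses
    return o;
  }

 private:
  std::map<std::string, std::shared_ptr<ToyOnode>> cache_;  // unbounded here
};
```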

5) PREFIX_OMAP

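The object's OMap data. The OMap header and key/value pairs attached to an object are all stored in RocksDB under the PREFIX_OMAP prefix. Object xattrs, by contrast, are kept inside the onode itself.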
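Placing all of an object's OMap entries under one prefix works well when each key starts with a per-object numeric id. That keeps one object's entries adjacent, so a single range scan returns them in order. To our understanding BlueStore builds OMap keys this way. The exact encoding below (an 8-byte big-endian id, a '.' separator, then the user key) is an assumption for the sketch, and omap_key and omap_of are hypothetical.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <string>

// Hypothetical encoding: big-endian id so byte order equals numeric order.
std::string omap_key(uint64_t id, const std::string& user_key) {
  std::string out;
  for (int shift = 56; shift >= 0; shift -= 8)
    out.push_back(static_cast<char>((id >> shift) & 0xff));
  out.push_back('.');
  out += user_key;
  return out;
}

// All user keys of object `id`, via one range scan over [start, end).
// (id + 1 would overflow for UINT64_MAX; ignored in this sketch.)
std::map<std::string, std::string> omap_of(
    const std::map<std::string, std::string>& kv, uint64_t id) {
  std::map<std::string, std::string> out;
  const std::string start = omap_key(id, "");
  const std::string end = omap_key(id + 1, "");
  for (auto it = kv.lower_bound(start); it != kv.end() && it->first < end; ++it)
    out[it->first.substr(start.size())] = it->second;
  return out;
}
```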

6) PREFIX_DEFERRED

Information about BlueStore deferred transactions. The corresponding data structure is defined as follows:

```cpp
/// writeahead-logged transaction
```
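A deferred transaction acts as a write-ahead log for small writes. BlueStore first makes the write durable as a record in RocksDB, later applies it to its final location on the device, and then deletes the record. Records that survive a crash are replayed at mount. The toy below illustrates that flow. ToyDeferred is hypothetical, and its sequence-numbered records are an assumption rather than the real bluestore_deferred_transaction_t layout.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <string>
#include <utility>

// Hypothetical deferred-write flow over a tiny simulated disk.
struct ToyDeferred {
  // seq -> (disk offset, data): stands in for the "L"-prefixed records.
  std::map<uint64_t, std::pair<uint64_t, std::string>> log;
  std::string disk = std::string(64, '.');
  uint64_t next_seq = 1;

  // Step 1: the write is durable once its log record is committed.
  uint64_t queue(uint64_t off, const std::string& data) {
    uint64_t seq = next_seq++;
    log[seq] = {off, data};
    return seq;
  }

  // Step 2 (later, or at mount after a crash): apply records in seq order,
  // then drop them. Re-applying the same record twice is harmless.
  void apply_all() {
    for (const auto& e : log)
      disk.replace(e.second.first, e.second.second.size(), e.second.second);
    log.clear();
  }
};
```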

7) PREFIX_ALLOC

Used by the FreelistManager, by default BitmapFreelistManager:

```
B blocks
```
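Meta keys under this prefix, such as blocks, describe the geometry of the bitmap. The sketch below assumes there are also keys named bytes_per_block and blocks_per_key; those names are from memory, so verify them with the tool. Given that geometry, a byte offset maps to exactly one bitmap key and one bit inside it. BitmapLayout is hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical geometry helper for a bitmap split across many KV entries.
struct BitmapLayout {
  uint64_t bytes_per_block;  // bytes covered by one bit
  uint64_t blocks_per_key;   // bits stored in one KV value

  uint64_t bytes_per_key() const { return bytes_per_block * blocks_per_key; }
  // Start offset of the bitmap key covering byte offset `off`.
  uint64_t key_offset(uint64_t off) const { return off - off % bytes_per_key(); }
  // Bit index of `off` inside that key's bitmap.
  uint64_t bit_index(uint64_t off) const {
    return (off % bytes_per_key()) / bytes_per_block;
  }
  // Number of bitmap keys needed for a device of `size` bytes (rounded up).
  uint64_t key_count(uint64_t size) const {
    return (size + bytes_per_key() - 1) / bytes_per_key();
  }
};
```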

8) PREFIX_SHARED_BLOB

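Metadata for shared blobs. A shared blob is referenced by the extent maps of more than one object, for example after a clone, so its reference counts are kept in a separate record rather than in any single onode.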
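A shared blob can only release its space once no object refers to it any more. The real bluestore_shared_blob_t tracks reference counts over byte ranges. This hypothetical toy keys whole extents by offset to show just the idea.

```cpp
#include <cassert>
#include <cstdint>
#include <map>

// Hypothetical per-extent reference counts for one shared blob.
class ToySharedBlobRefs {
 public:
  void get(uint64_t off) { ++refs_[off]; }

  // Drop one reference; true means the extent is unreferenced and can be freed.
  bool put(uint64_t off) {
    auto it = refs_.find(off);
    assert(it != refs_.end() && "put without matching get");
    if (--it->second == 0) {
      refs_.erase(it);
      return true;
    }
    return false;
  }

  bool empty() const { return refs_.empty(); }

 private:
  std::map<uint64_t, unsigned> refs_;  // extent offset -> reference count
};
```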

RocksDB Tool

Ceph provides a command, ceph-kvstore-tool, for reading the data in a KV store. Its help output is:

```shell
root@ceph6:~
```

Usage example:

```shell
root@ceph6:~
```